The Institute of the Information Society of the Ludovika University of Public Service regularly surveys public opinion in Hungary on matters relating to the information society. Each year, the surveys combine recurring questions suitable for longitudinal research with newly introduced themes.

This research report focuses on one such new topic: public attitudes towards artificial intelligence. The authors set out to learn how Hungarians view this new technological development, which is increasingly present in public discourse, and how they feel about its use and risks in certain fields of application. Telephone surveys were carried out from November to December 2023 on a sample representative of the Hungarian population by age, sex, qualification, and residence type.

1. Most say AI may be convenient, but we might lose control over it

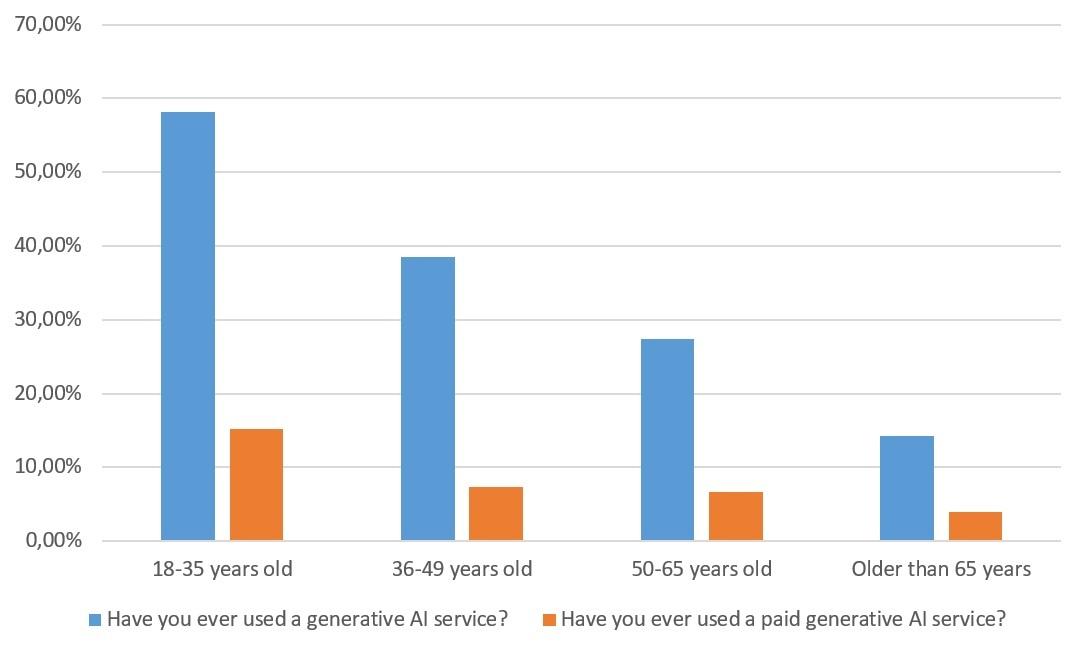

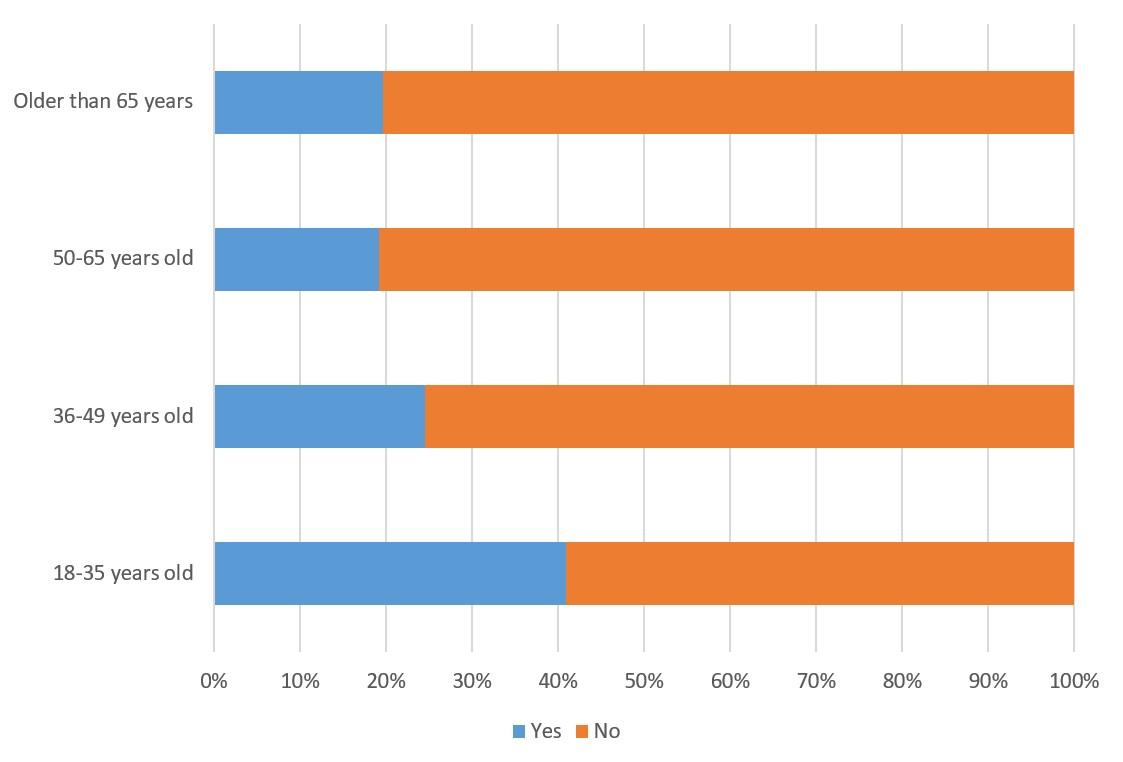

As regards general usage patterns, 34% of respondents have already used a generative AI service, but there is a significant generational gap: while 58% of young people have used one, only 14% of people over 65 have. Free AI services are currently the most widely used, although 8% of all respondents, i.e. approximately 700,000 Hungarian adults, have already used a subscription service. A generational gap can also be observed in this respect: younger people are almost four times as likely to have used paid services (15%) as the elderly (4%).

The use of generative AI services in Hungarian society
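The arithmetic behind two of the figures quoted above can be checked with a short sketch. It uses only the rounded percentages given in the text; the function names are illustrative, and because the published shares are rounded, the results are approximations rather than the authors' own calculation.

```python
def implied_population(count: int, percent: float) -> float:
    """Population base implied by a statement 'percent % of respondents ~ count people'."""
    return count * 100 / percent


def usage_ratio(percent_a: float, percent_b: float) -> float:
    """How many times more common usage is in group A than in group B."""
    return percent_a / percent_b


# Rounded figures quoted in the text; the true unrounded shares are not published here.
base = implied_population(700_000, 8)  # 8% of respondents ~ 700,000 adults
ratio = usage_ratio(15, 4)             # paid services: younger people vs. over 65s

print(f"Implied adult population base: {base:,.0f}")
print(f"Paid-service usage ratio, young vs. elderly: {ratio:.2f}")
```

Since 8% is itself a rounded value, the implied base should be read as an order-of-magnitude check only.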

When asked about robots endowed with human traits, an almost unanimous majority felt that robots, unlike humans, have no soul or emotions (94%) and can neither love nor suffer (93% each). Somewhat fewer thought that robots cannot even understand a joke (79%). Strikingly, although respondents do not credit robots with the emotional and spiritual traits of flesh-and-blood humans, 50% disagreed with the claim that humans can be trusted while robots cannot.

A question that regularly emerges in public debate is whether computers will be able to become self-aware in the future. 47% of respondents do not think this will be possible, while 43% think it could happen. However, a clear majority (59% against 36.5%) thinks that humans might lose control over machines in the future.

20% of those surveyed believed that robots may eventually become equals to humans in society, while 78% of them did not think this is possible.

An overwhelming majority of the respondents, 83%, believe that artificial intelligence can make our lives more comfortable overall. For the time being, however, half of them (50%) perceive the changes that AI is bringing to their environment and their lives as more of a challenge, while fewer (41%) see them as a benefit.

2. Accepted as an expert, not a partner

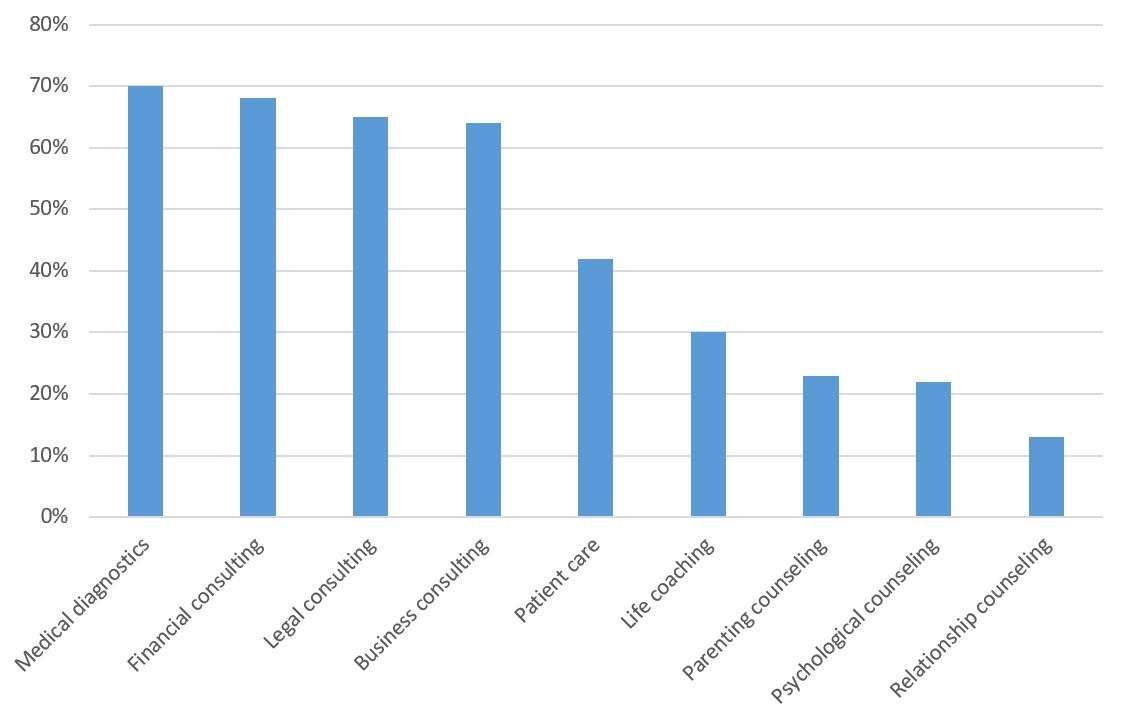

The emergence of artificial intelligence, with machines becoming capable of managing increasingly complex processes, has recently focused attention on the question of which areas of our lives we should, or can, allow technology to control and influence. We sought information on this issue by asking people about their perceptions of specific areas of application of AI. The proportions of respondents who would use AI in each domain are shown in the following figure:

In what areas would you use artificial intelligence?

The above figures suggest that respondents would rather turn to their fellow human beings than to robots in those areas of life that touch on the essential emotional and spiritual aspects of human personality. While the people surveyed had more confidence in AI to help them navigate areas that are primarily professional in nature (finance, law, business), they had much less confidence in machines in domains that rely on interpersonal human connections. Even so, their willingness to consult AI for expert advice in important domains of life shows a degree of trust in it.

While 40% of respondents would not object to having a machine-generated human image on screen as a news presenter or anchor, 57% would insist on having a human feature in such programmes.

Similarly to the results in previous figures, the proportion of people who would like algorithms to recommend news items and videos for them to read and watch is 41%, while 57% of the respondents indicated that they would accept software offering news and information that expresses opinions differing from those they have already consumed.

3. Artificial intelligence in the field of law: recognising the role of machine learning, but keeping the final decision in human hands

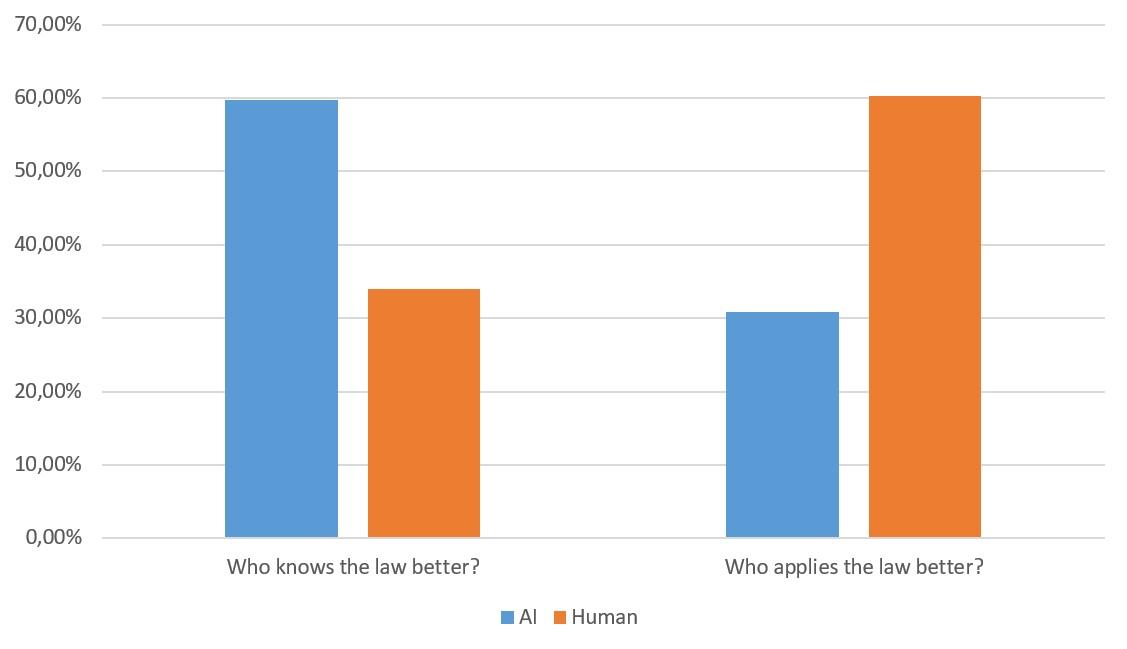

The survey included questions about the relationship between artificial intelligence and the law as a specific domain of application, given the significant interest in the decision-making capabilities of algorithms and their potential regulatory function. On the one hand, 60% of respondents (compared to 34%) were of the opinion that a piece of software covering the whole body of the law knows the law better than a human lawyer. On the other hand, 60% of respondents (compared to 31%) were of the opinion that a human lawyer can apply the law with greater accuracy than software that incorporates all the laws. A mere 17.6% of respondents were willing to entrust artificial intelligence with making judicial decisions in court proceedings, while 78% agreed that humans should always be the ones to deliver decisions. Although the majority of respondents viewed AI as having more substantial legal knowledge, they nevertheless preferred a human-centric approach to decisions in individual cases.

Who is better at knowing and applying the law?

A mere 15% of respondents would accept the appointment of a robo-judge (i.e. a piece of AI software) to adjudicate a case, even if it could reach a decision within a day and without the possibility of appeal. Conversely, 78% would prefer to entrust the case to a human judge, who could deliver a decision within a year and with the possibility of appeal. Finally, only 12% expressed confidence in the ability of AI to formulate legislation, while an overwhelming 84% asserted that law-making should always remain an exclusively human domain.

Overall, respondents believe AI software to be more familiar with the law (60% versus 34%) and less fallible than humans at recalling it accurately (52% versus 39%), yet roughly twice as many believe that a flesh-and-blood human would be better at applying it as believe the reverse (60% versus 31%).

4. AI in education: a useful aid, but not a replacement for the teacher

Our study examined AI in education both in general and in specific educational contexts, covering the humanities and the sciences alike. The results indicated that 65% of the Hungarian population does not consider it acceptable for students to use generative AI to write assessed assignments in the future.

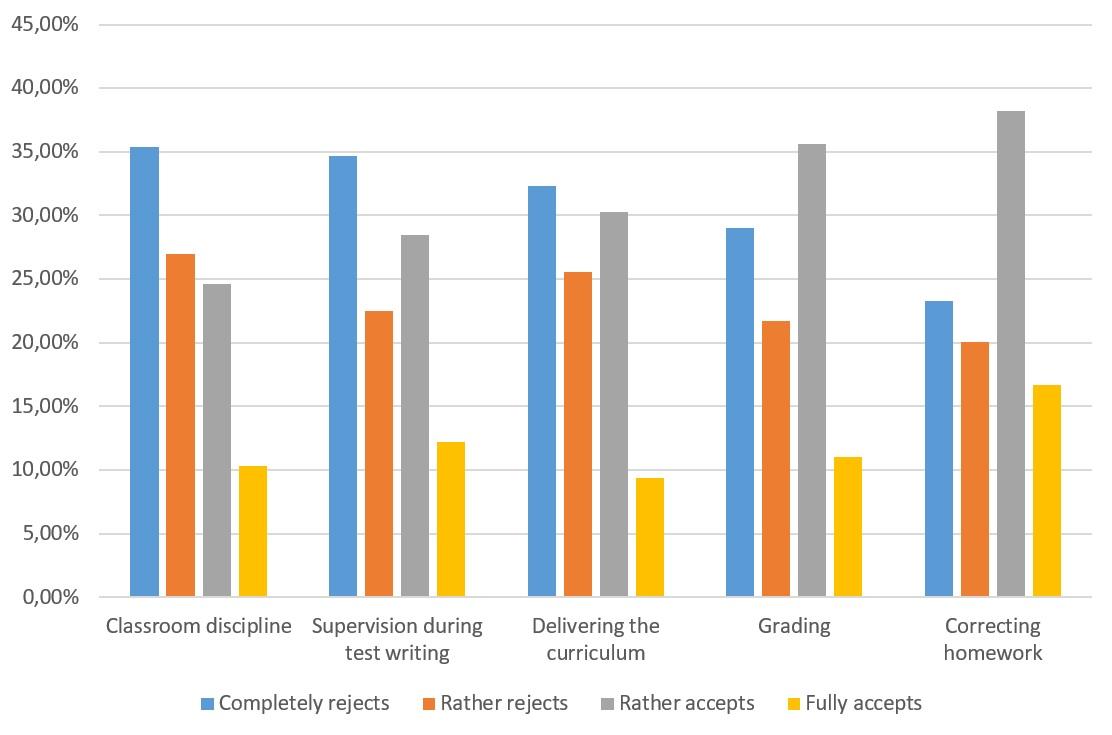

On the issue of whether teaching material should be presented by an interactive AI instead of a teacher, 60% rejected the idea for Hungarian language lessons, while for maths classes, the rate was similar (58%), a difference which is within the range of statistical error. The difference between the respondents’ views of applying AI in different types of classes is more pronounced in the context of grading: 60.5% of respondents disapproved of the notion of employing automatic tests for grading in Hungarian classes, while the disapproval rate was lower in mathematics classes, at 51%. Concerning the correction of homework, the rejection rate was still lower, with 51% of respondents rejecting the prospect of having Hungarian homework checked and corrected by AI, in comparison to 43% for maths homework.

In which areas of education do you accept or reject the use of artificial intelligence?
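The remark above that the 60% and 58% rejection rates differ by less than the statistical error can be illustrated with a rough calculation. This is a minimal sketch, not the authors' method: the report does not state the sample size, so `ASSUMED_N` is a hypothetical value, and the formula is the standard normal approximation for a single proportion from a simple random sample.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a share p in a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical sample size: the report does not state the number of respondents.
ASSUMED_N = 1000

moe = margin_of_error(0.60, ASSUMED_N)  # rejection rate for Hungarian lessons
gap = 0.60 - 0.58                       # Hungarian vs. maths lessons

print(f"Margin of error at n={ASSUMED_N}: +/-{moe * 100:.1f} percentage points")
print(f"Observed gap: {gap * 100:.1f} percentage points")
print("Gap is within the margin of error" if gap < moe
      else "Gap exceeds the margin of error")
```

Both shares come from the same respondents, so a formal test of the difference would need the paired answers; the sketch only shows why a two-point gap is not meaningful at a sample size of this order.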

It appears that as we move further away from the traditional classroom setting, there is an increased acceptance of AI in education amongst the public, accompanied by a concurrent decline in rejection of its use.

5. Human and machine creative works: the majority want recognisable machine involvement and stronger protection for human creations

Artificial intelligence applications that produce different kinds of content (text, images, sound, etc.) are posing new questions about the way we judge creative work and the works created. Our research tried to find out where and how respondents perceive the difference between works created by humans alone and those created by machines.

55% of respondents consider a picture that has been altered by the artist using software (e.g. Photoshop) to be less valuable than an entirely original image, while 41% do not consider this difference to be relevant to the value of the picture. 58% of respondents (compared to 39%) fear that the inclusion of AI in the creation of works may lead to a reduction in the quality of cultural content.

The overwhelming majority of respondents, 85%, would prefer to protect the work of human creativity rather than that of machines. 80% of those surveyed believed that it is important to be able to tell that a work was created by artificial intelligence rather than a human being.

The respondents were asked whether they consider it important that various genres of text are written by a person rather than a machine. The proportions who felt it was, for each text type, are as follows: thesis: 83%, poetry: 79%, novels: 77.5%, prayers: 74%, longer analytical articles: 68%, newspaper articles: 49.5%.

In connection with the previous two topics, the data revealed that only a minority of respondents (49.5%) would insist that short articles be written by people rather than by machines, while in contrast over two-thirds of the respondents (68%) felt that a human should produce the content for a longer analytical piece. These data also demonstrate the tendency that, in areas where the human factor plays a significant role and adds substantial value, the majority consider the contribution of humans to be indispensable.
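The split described above, between text types where a majority insists on a human author and those where it does not, can be reproduced from the published shares. The sketch below uses only the percentages listed in the text; the variable names are illustrative.

```python
# Share of respondents (in %) who consider it important that a human writes the text.
human_author_share = {
    "thesis": 83.0,
    "poetry": 79.0,
    "novels": 77.5,
    "prayers": 74.0,
    "longer analytical articles": 68.0,
    "newspaper articles": 49.5,
}

# A majority means strictly more than half of respondents.
majority = [text for text, share in human_author_share.items() if share > 50]
minority = [text for text, share in human_author_share.items() if share <= 50]

print("Majority insists on a human author:", ", ".join(majority))
print("No majority for a human author:", ", ".join(minority))
```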

Only 25% of respondents would consider content created using generative AI as their own, while 71% would not.

Do you take ownership of the content created with the help of generative AI?

6. Artificial intelligence at work: seen as an opportunity, not a threat by the majority

A key question in the discourse on the social impact of artificial intelligence is in which areas and to what extent the latest machine technologies can more effectively support or even replace human work.

22% of respondents reported having used artificial intelligence to help them with their work, while 77% indicated never having done so. Among those who have used AI, 40% use it on a weekly basis.

61.5% of respondents see AI as more of an opportunity than a threat in their own work, while 23% regard it as more of a threat. The attitudes of different age groups towards AI are interesting: while 75% of the younger age group (18-35 years old) believe that AI machines could make a useful contribution to their work, the figure is only 50% for the over 65s. Within the professional environment, the figure stands at 60% compared to 27%.

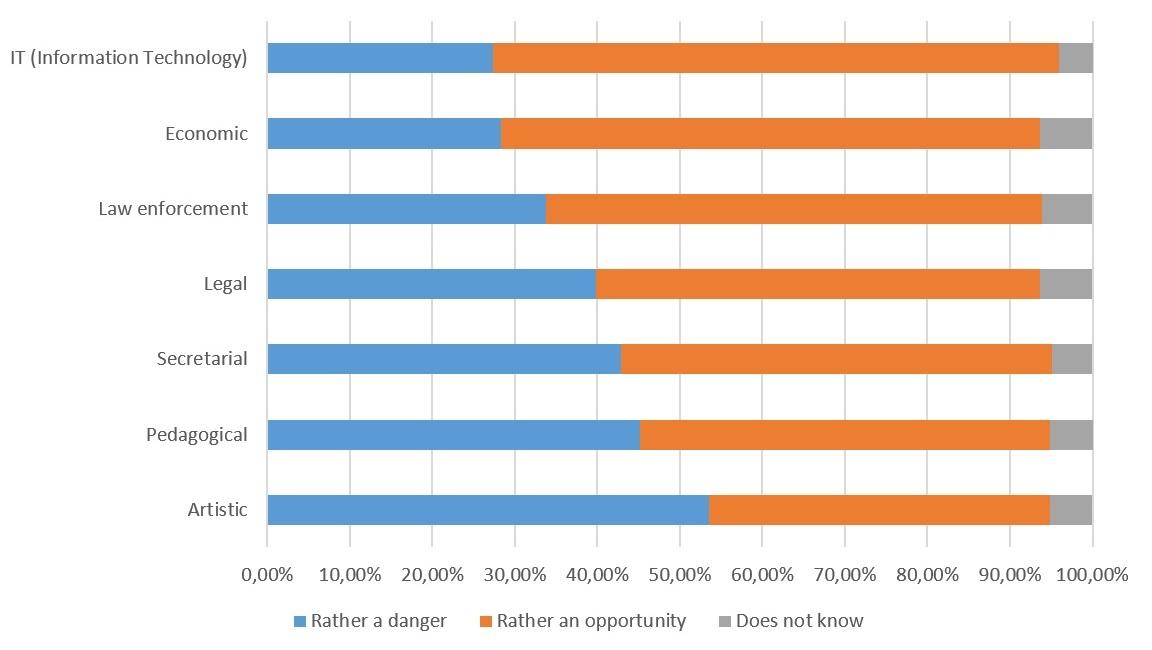

In which jobs do you see the spread of artificial intelligence as an advantage, and in which jobs do you see it as a threat?